FinGPT: Open-Source Financial Large Language Models https://github.com/AI4Finance-Foundation/FinGPT

FinGPT: Open-Source Financial Large Language Models https://github.com/AI4Finance-Foundation/FinGPT

Preparation steps:

Execute install script:

python3 ljr-build make release

Result (error)

warning: Not a git repository. Use --no-index to compare two paths outside a working tree

usage: git diff --no-index [<options>] <path> <path>

Diff output format options

-p, --patch generate patch

-s, --no-patch suppress diff output

-u generate patch

-U, --unified[=<n>] generate diffs with <n> lines context

-W, --function-context

generate diffs with <n> lines context

--raw generate the diff in raw format

--patch-with-raw synonym for '-p --raw'

--patch-with-stat synonym for '-p --stat'

--numstat machine friendly --stat

--shortstat output only the last line of --stat

-X, --dirstat[=<param1,param2>...]

output the distribution of relative amount of changes for each sub-directory

--cumulative synonym for --dirstat=cumulative

--dirstat-by-file[=<param1,param2>...]

synonym for --dirstat=files,param1,param2...

--check warn if changes introduce conflict markers or whitespace errors

--summary condensed summary such as creations, renames and mode changes

--name-only show only names of changed files

--name-status show only names and status of changed files

--stat[=<width>[,<name-width>[,<count>]]]

generate diffstat

--stat-width <width> generate diffstat with a given width

--stat-name-width <width>

generate diffstat with a given name width

--stat-graph-width <width>

generate diffstat with a given graph width

--stat-count <count> generate diffstat with limited lines

--compact-summary generate compact summary in diffstat

--binary output a binary diff that can be applied

--full-index show full pre- and post-image object names on the "index" lines

--color[=<when>] show colored diff

--ws-error-highlight <kind>

highlight whitespace errors in the 'context', 'old' or 'new' lines in the diff

-z do not munge pathnames and use NULs as output field terminators in --raw or --numstat

--abbrev[=<n>] use <n> digits to display SHA-1s

--src-prefix <prefix>

show the given source prefix instead of "a/"

--dst-prefix <prefix>

show the given destination prefix instead of "b/"

--line-prefix <prefix>

prepend an additional prefix to every line of output

--no-prefix do not show any source or destination prefix

--inter-hunk-context <n>

show context between diff hunks up to the specified number of lines

--output-indicator-new <char>

specify the character to indicate a new line instead of '+'

--output-indicator-old <char>

specify the character to indicate an old line instead of '-'

--output-indicator-context <char>

specify the character to indicate a context instead of ' '

Diff rename options

-B, --break-rewrites[=<n>[/<m>]]

break complete rewrite changes into pairs of delete and create

-M, --find-renames[=<n>]

detect renames

-D, --irreversible-delete

omit the preimage for deletes

-C, --find-copies[=<n>]

detect copies

--find-copies-harder use unmodified files as source to find copies

--no-renames disable rename detection

--rename-empty use empty blobs as rename source

--follow continue listing the history of a file beyond renames

-l <n> prevent rename/copy detection if the number of rename/copy targets exceeds given limit

Diff algorithm options

--minimal produce the smallest possible diff

-w, --ignore-all-space

ignore whitespace when comparing lines

-b, --ignore-space-change

ignore changes in amount of whitespace

--ignore-space-at-eol

ignore changes in whitespace at EOL

--ignore-cr-at-eol ignore carrier-return at the end of line

--ignore-blank-lines ignore changes whose lines are all blank

--indent-heuristic heuristic to shift diff hunk boundaries for easy reading

--patience generate diff using the "patience diff" algorithm

--histogram generate diff using the "histogram diff" algorithm

--diff-algorithm <algorithm>

choose a diff algorithm

--anchored <text> generate diff using the "anchored diff" algorithm

--word-diff[=<mode>] show word diff, using <mode> to delimit changed words

--word-diff-regex <regex>

use <regex> to decide what a word is

--color-words[=<regex>]

equivalent to --word-diff=color --word-diff-regex=<regex>

--color-moved[=<mode>]

moved lines of code are colored differently

--color-moved-ws <mode>

how white spaces are ignored in --color-moved

Other diff options

--relative[=<prefix>]

when run from subdir, exclude changes outside and show relative paths

-a, --text treat all files as text

-R swap two inputs, reverse the diff

--exit-code exit with 1 if there were differences, 0 otherwise

--quiet disable all output of the program

--ext-diff allow an external diff helper to be executed

--textconv run external text conversion filters when comparing binary files

--ignore-submodules[=<when>]

ignore changes to submodules in the diff generation

--submodule[=<format>]

specify how differences in submodules are shown

--ita-invisible-in-index

hide 'git add -N' entries from the index

--ita-visible-in-index

treat 'git add -N' entries as real in the index

-S <string> look for differences that change the number of occurrences of the specified string

-G <regex> look for differences that change the number of occurrences of the specified regex

--pickaxe-all show all changes in the changeset with -S or -G

--pickaxe-regex treat <string> in -S as extended POSIX regular expression

-O <file> control the order in which files appear in the output

--find-object <object-id>

look for differences that change the number of occurrences of the specified object

--diff-filter [(A|C|D|M|R|T|U|X|B)...[*]]

select files by diff type

--output <file> Output to a specific file

fatal: detected dubious ownership in repository at '/home/u/project'

To add an exception for this directory, call:

git config --global --add safe.directory /home/u/project

gen_git_hash.sh: line 12: __generated__/release/generated/git_commit_hash.cpp.tmp: Permission denied

mv: cannot stat '__generated__/release/generated/git_commit_hash.cpp.tmp': No such file or directory

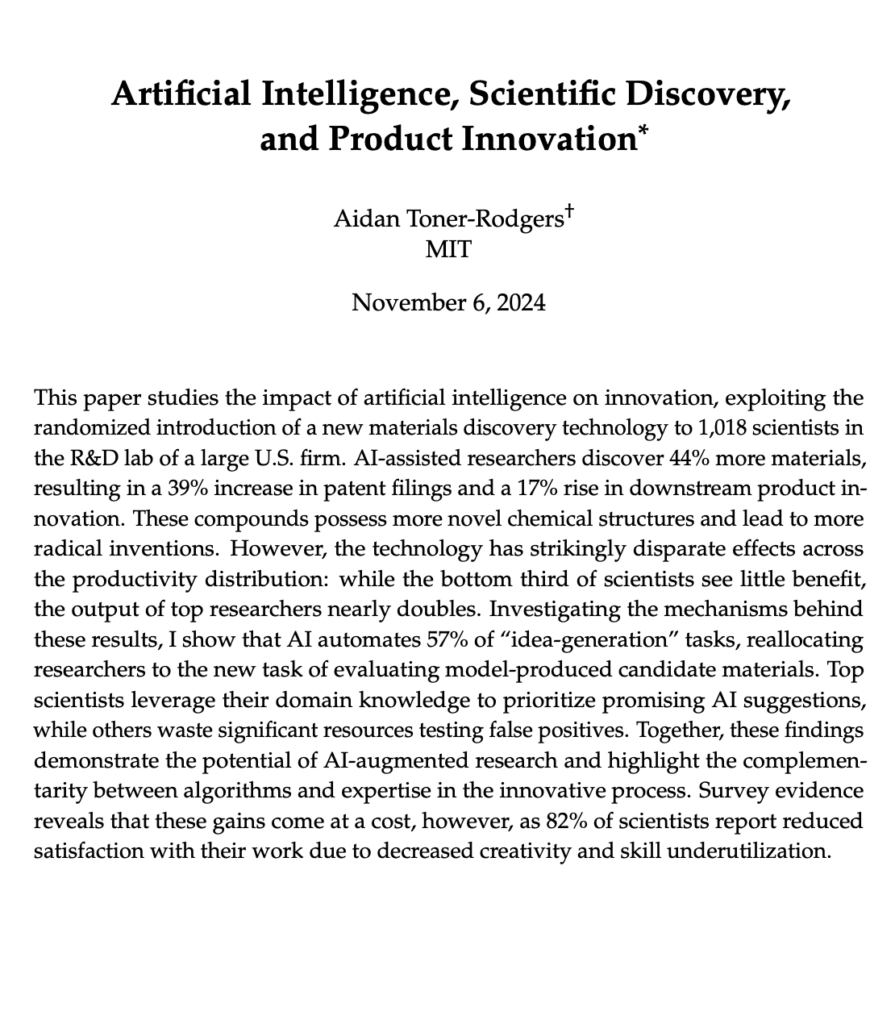

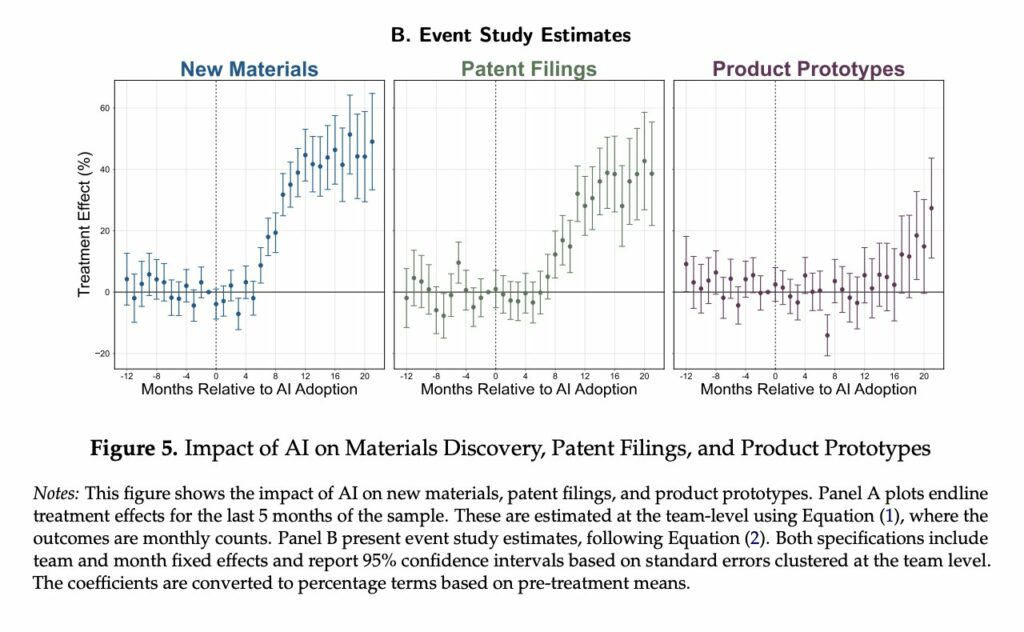

Sumber: https://x.com/calebwatney/status/1855016577646666123

Complete paper: https://aidantr.github.io/files/AI_innovation.pdf

Source:

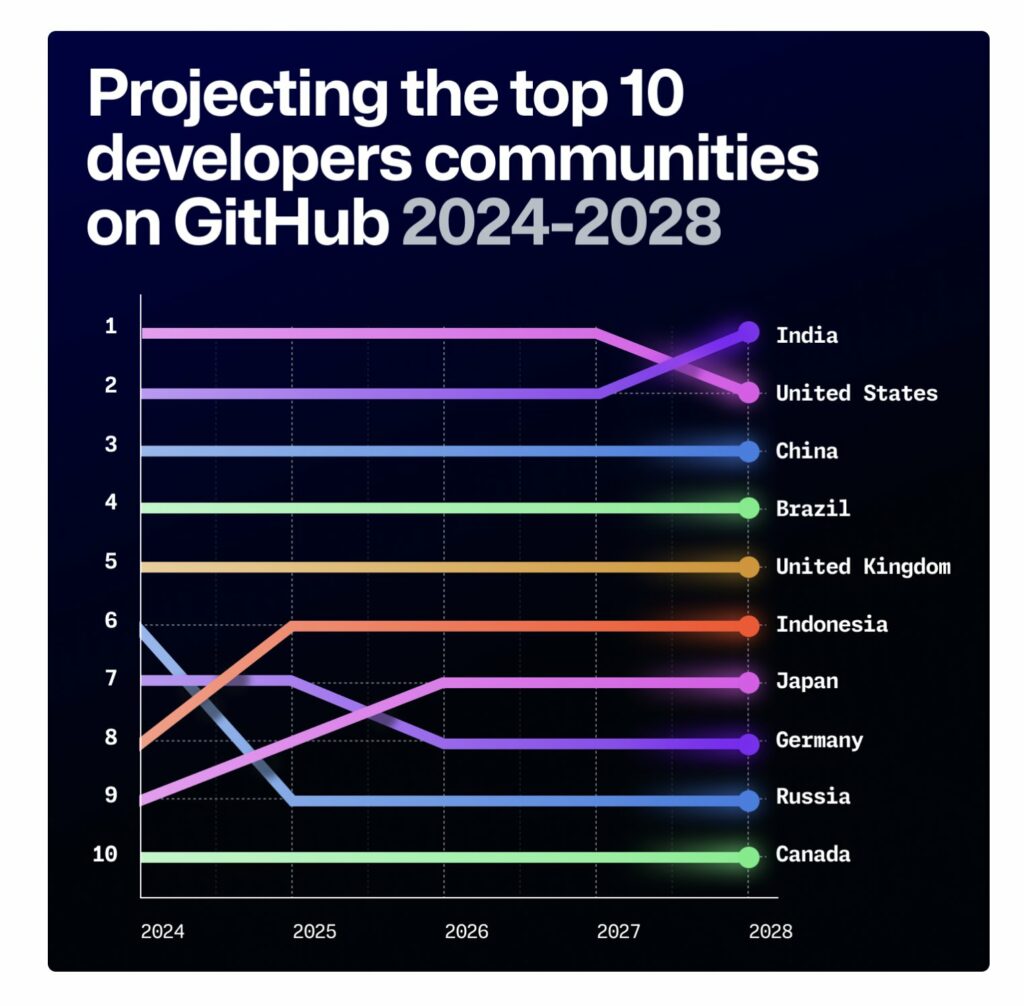

How to make graph like this in Python

I saw a nice graph from posting in https://x.com/junwatu/status/1851779704510124178/photo/1

Let’s ask Claude to imitate the graph.

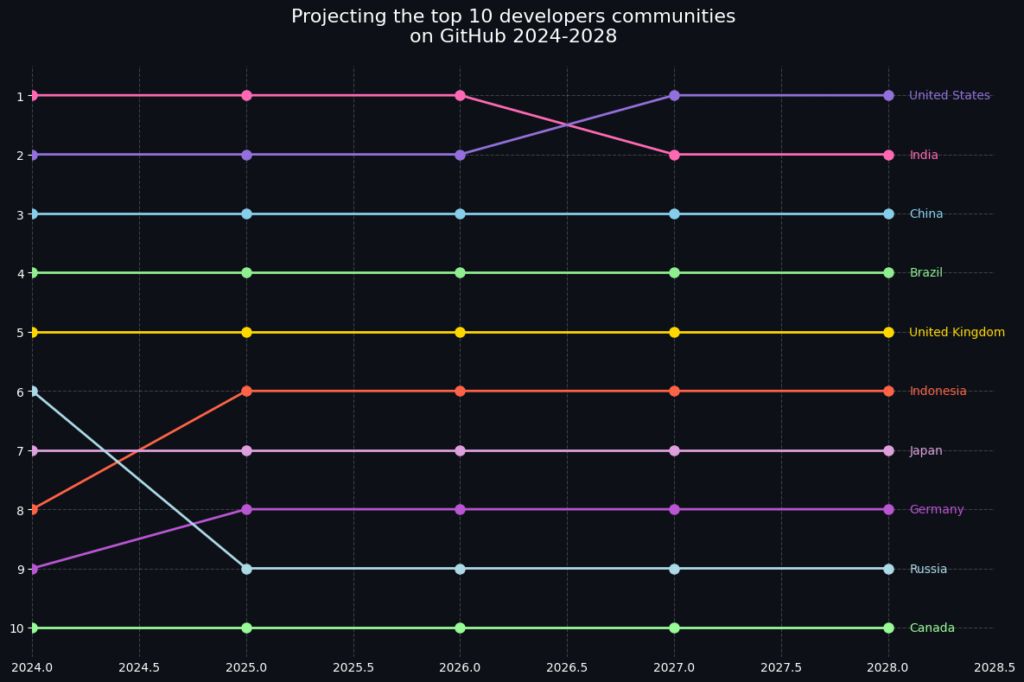

I’ll help you create a similar line graph using Python, primarily using matplotlib with a modern, dark theme that matches the style of the image.

This code creates a graph similar to the one shown in your image. Here are the key features:

import matplotlib.pyplot as plt

import numpy as np

# Sample data

countries = ['India', 'United States', 'China', 'Brazil', 'United Kingdom',

'Indonesia', 'Japan', 'Germany', 'Russia', 'Canada']

# Create sample data for demonstration

# In reality, you would have your actual data here

years = np.array([2024, 2025, 2026, 2027, 2028])

data = np.array([

[1, 1, 1, 2, 2], # India

[2, 2, 2, 1, 1], # United States

[3, 3, 3, 3, 3], # China

[4, 4, 4, 4, 4], # Brazil

[5, 5, 5, 5, 5], # UK

[8, 6, 6, 6, 6], # Indonesia

[7, 7, 7, 7, 7], # Japan

[9, 8, 8, 8, 8], # Germany

[6, 9, 9, 9, 9], # Russia

[10, 10, 10, 10, 10] # Canada

])

# Set the style

plt.style.use('dark_background')

fig, ax = plt.subplots(figsize=(12, 8))

# Custom colors for each line

colors = ['#FF69B4', '#9370DB', '#87CEEB', '#90EE90', '#FFD700',

'#FF6347', '#DDA0DD', '#BA55D3', '#ADD8E6', '#98FB98']

# Plot each line

for i, (country, color) in enumerate(zip(countries, colors)):

ax.plot(years, data[i], color=color, linewidth=2, marker='o', markersize=8)

# Add country name at the end of each line

ax.text(years[-1] + 0.1, data[i, -1], country, color=color,

verticalalignment='center', fontsize=10)

# Customize the plot

ax.set_ylim(10.5, 0.5) # Reverse y-axis and add some padding

ax.set_xlim(2024, 2028.5) # Add space for labels on the right

# Set title

plt.title('Projecting the top 10 developers communities\non GitHub 2024-2028',

fontsize=16, pad=20)

# Customize grid

ax.grid(True, linestyle='--', alpha=0.2)

# Customize axes

ax.spines['top'].set_visible(False)

ax.spines['right'].set_visible(False)

ax.spines['bottom'].set_visible(False)

ax.spines['left'].set_visible(False)

# Set y-axis ticks

ax.set_yticks(range(1, 11))

# Remove x-axis ticks but keep labels

ax.tick_params(axis='x', length=0)

# Set background color

fig.patch.set_facecolor('#0D1117')

ax.set_facecolor('#0D1117')

# Adjust layout

plt.tight_layout()

# Show plot

plt.show()

To use this code, you’ll need to have matplotlib installed. You can install it using:

pip install matplotlibYou can modify the data array to match your actual data points. Each row represents a country’s ranking over the years.

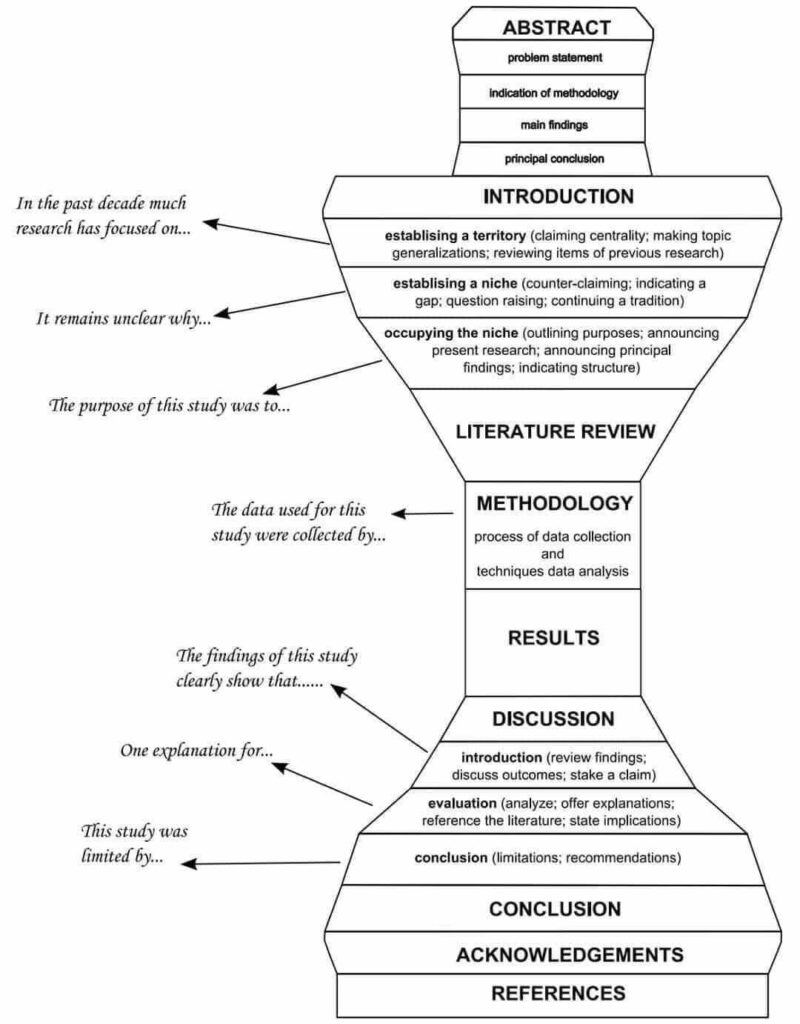

The art of writing literature review: What do we know and what do we need to know?

https://www.sciencedirect.com/science/article/pii/S0969593120300585

One Billion Row Challenge https://1brc.dev/