Below is the YOLOX installation process on Ubuntu 20.04.3.

The first step is to install Ubuntu 20.04.3, following the instructions at https://ubuntu.com/download/desktop

Next, update the Ubuntu packages:

sudo apt update

sudo apt upgrade

Install Anaconda; the instructions are at https://docs.anaconda.com/anaconda/install/linux/

Create a dedicated environment for YOLOX and activate it:

conda create -n yolox python=3.7.6

conda activate yolox

The following steps are based on the installation procedure at https://github.com/Megvii-BaseDetection/YOLOX

Clone YOLOX

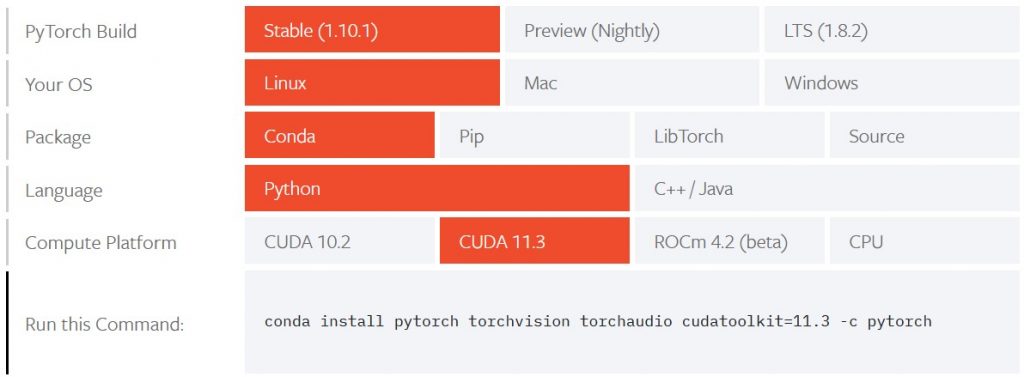

git clone https://github.com/Megvii-BaseDetection/YOLOX

cd YOLOX

Install PyTorch; the command is adapted from https://pytorch.org/get-started/locally/

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

Install YOLOX and pycocotools, running these commands from inside the cloned YOLOX directory:

pip3 install -U pip

pip3 install -r requirements.txt

pip3 install -v -e .

pip3 install cython

pip3 install 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'

The installation is now complete. Next, test it on the demo image. First, download the weights pretrained on the COCO dataset:

wget https://github.com/Megvii-BaseDetection/YOLOX/releases/download/0.1.1rc0/yolox_s.pth

Run inference with the YOLOX-S weights:

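python tools/demo.py image -f exps/default/yolox_s.py -c yolox_s.pth --path assets/dog.jpg --conf 0.25 --nms 0.45 --tsize 640 --save_result --device cpu

Alternatively, select the model by name with -n instead of passing an experiment file with -f. This example uses YOLOX-X, so its weights file, yolox_x.pth, must first be downloaded from the same YOLOX releases page: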

python tools/demo.py image -n yolox-x -c yolox_x.pth --path assets/dog.jpg --conf 0.25 --nms 0.45 --tsize 640 --save_result --device cpu
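The `--tsize 640` flag sets the square input size the model runs at. As far as I can tell from YOLOX's preprocessing (`preproc` in `yolox/data/data_augment.py`), the image is scaled by a single ratio so that it fits inside the square without distortion, placed in the top-left corner, and the remainder is padded. The sketch below only computes that geometry under this assumption; `letterbox_geometry` is a hypothetical helper, not a YOLOX function, so check the code in your own checkout before relying on the details.

```python
from typing import Tuple


def letterbox_geometry(h: int, w: int, tsize: int = 640) -> Tuple[float, int, int, int, int]:
    """Return (ratio, new_h, new_w, pad_bottom, pad_right) for a tsize x tsize input.

    Hypothetical helper: assumes aspect-preserving scaling with padding added
    on the bottom and right, which is how YOLOX's preproc appears to work.
    """
    # One scale factor for both axes, chosen so the longer side fits exactly.
    ratio = min(tsize / h, tsize / w)
    new_h, new_w = int(h * ratio), int(w * ratio)
    # Whatever the resized image does not cover is filled with padding.
    return ratio, new_h, new_w, tsize - new_h, tsize - new_w


if __name__ == "__main__":
    # A 1280x640 (height x width) image is halved and padded on the right.
    print(letterbox_geometry(1280, 640))  # (0.5, 640, 320, 0, 320)
```

The detected boxes are then divided by the same ratio to map them back onto the original image, so a smaller `--tsize` generally runs faster but gives small objects fewer pixels.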
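The `--conf 0.25` and `--nms 0.45` flags control post-processing: detections scoring below 0.25 are discarded, and among boxes that overlap with an IoU above 0.45 only the highest-scoring one is kept (non-maximum suppression). The sketch below is a simplified, class-agnostic version in plain Python. As far as I can tell from `postprocess` in `yolox/utils/boxes.py`, YOLOX itself scores each box as objectness times class confidence and runs per-class NMS through torchvision, so treat this only as an illustration; `iou` and `filter_detections` are illustrative names.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(boxes: Sequence[Box], scores: Sequence[float],
                      conf: float = 0.25, nms: float = 0.45) -> List[int]:
    """Return indices of boxes kept after confidence filtering and greedy NMS."""
    # Step 1: drop low-confidence detections (the --conf threshold).
    candidates = [i for i, s in enumerate(scores) if s >= conf]
    # Step 2: visit the remaining boxes from highest to lowest score.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in candidates:
        # Keep a box only if it does not overlap any kept box beyond --nms.
        if all(iou(boxes[i], boxes[k]) <= nms for k in keep):
            keep.append(i)
    return keep
```

Raising `--conf` removes weak detections, while lowering `--nms` suppresses more overlapping boxes, which can also merge nearby objects that genuinely overlap.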
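Both commands run on the CPU (`--device cpu`). As far as I know, `demo.py` also accepts `gpu` for this flag, which only works if PyTorch can see a CUDA device; the cudatoolkit=11.3 build installed earlier needs an NVIDIA GPU and driver for that. The script below is a hypothetical convenience check, not part of YOLOX, that prints which value to pass.

```python
import importlib.util


def pick_device() -> str:
    """Return the --device value for demo.py: "gpu" if CUDA is usable, else "cpu"."""
    # Fall back to CPU when PyTorch is not installed in the current environment.
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch

    return "gpu" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"--device {pick_device()}")
```

Run it with the `python` from the activated `yolox` environment, since a different interpreter may not have the same PyTorch build.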
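If `demo.py` fails with an import error, a quick first check is whether the packages installed above can be found in the active environment. The import names listed here (`torch`, `torchvision`, `yolox`, `pycocotools`, `Cython`) are my assumption of the module names for those conda and pip packages, and `missing_modules` is a hypothetical helper.

```python
import importlib.util
from typing import Iterable, List

# Assumed import names for the packages installed in the steps above.
REQUIRED = ["torch", "torchvision", "yolox", "pycocotools", "Cython"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the names that cannot be found by the current interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    print("All packages found." if not missing else f"Missing: {', '.join(missing)}")
```

Any name reported as missing points to the installation step to repeat inside the `yolox` environment.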