An interesting free ebook

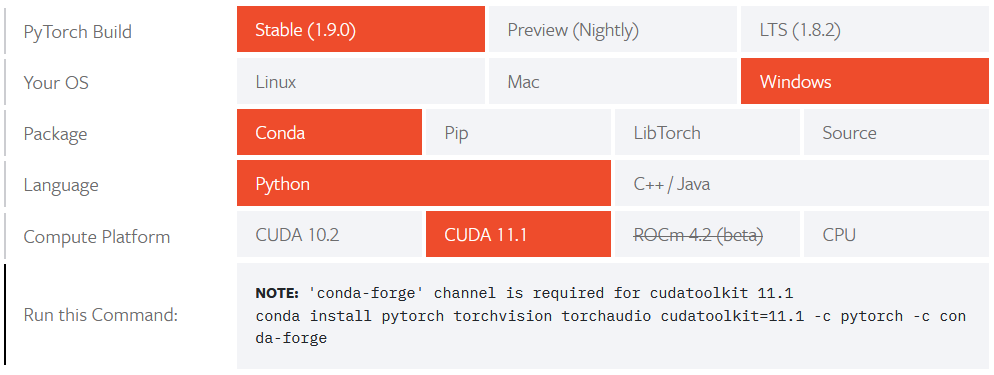

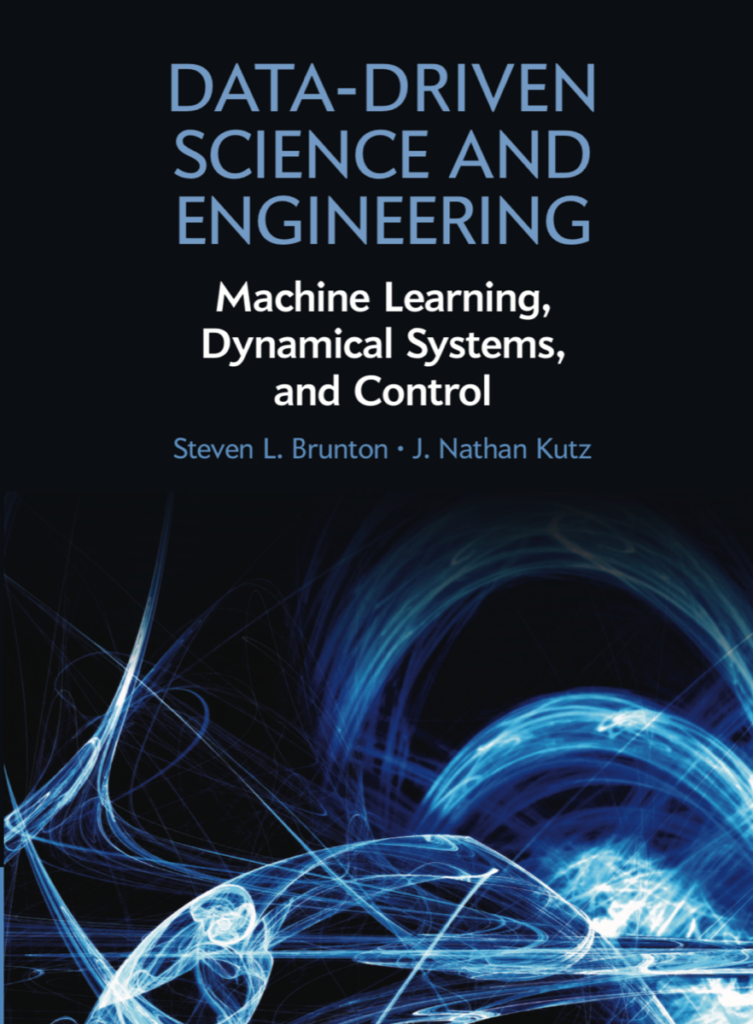

When we developed the course Statistical Machine Learning for engineering students at Uppsala University, we found no appropriate textbook, so we ended up writing our own. It will be published by Cambridge University Press in 2021.

Andreas Lindholm, Niklas Wahlström, Fredrik Lindsten, and Thomas B. Schön

A draft of the book is available below. We will keep a PDF of the book freely available even after its publication.

Latest draft of the book (older versions are also available)

Table of Contents

- Introduction
  - The machine learning problem
  - Machine learning concepts via examples
  - About this book

- Supervised machine learning: a first approach
  - Supervised machine learning
  - A distance-based method: k-NN
  - A rule-based method: Decision trees

- Basic parametric models for regression and classification
  - Linear regression
  - Classification and logistic regression
  - Polynomial regression and regularization
  - Generalized linear models

- Understanding, evaluating and improving the performance
  - Expected new data error: performance in production
  - Estimating the expected new data error
  - The training error–generalization gap decomposition
  - The bias-variance decomposition
  - Additional tools for evaluating binary classifiers

- Learning parametric models
  - Principles of parametric modelling
  - Loss functions and likelihood-based models
  - Regularization
  - Parameter optimization
  - Optimization with large datasets
  - Hyperparameter optimization

- Neural networks and deep learning
  - The neural network model
  - Training a neural network
  - Convolutional neural networks
  - Dropout

- Ensemble methods: Bagging and boosting
  - Bagging
  - Random forests
  - Boosting and AdaBoost
  - Gradient boosting

- Nonlinear input transformations and kernels
  - Creating features by nonlinear input transformations
  - Kernel ridge regression
  - Support vector regression
  - Kernel theory
  - Support vector classification

- The Bayesian approach and Gaussian processes

- Generative models and learning from unlabeled data
  - The Gaussian mixture model and discriminant analysis
  - Cluster analysis
  - Deep generative models
  - Representation learning and dimensionality reduction

- User aspects of machine learning
  - Defining the machine learning problem
  - Improving a machine learning model
  - What if we cannot collect more data?
  - Practical data issues
  - Can I trust my machine learning model?

- Ethics in machine learning (by David Sumpter)
  - Fairness and error functions
  - Misleading claims about performance
  - Limitations of training data