About the Author

Cem Ünsalan, PhD, has over 20 years of experience working on signal processing and embedded systems. He received his doctorate from Ohio State University in 2003. He has published 23 papers in scientific journals and eight international books.

Duygun E. Barkana, PhD, has over 16 years of experience working on control and robotic systems. She received her doctorate from Vanderbilt University in 2007. She has published 22 papers in scientific journals and six international book chapters.

H. Deniz Gürhan is pursuing a PhD at Yeditepe University, where he received his BSc degree. He has over six years of experience working with guided microprocessors and digital signal processing.

Table of Contents:

1. Introduction

2. Hardware to be Used in the Book

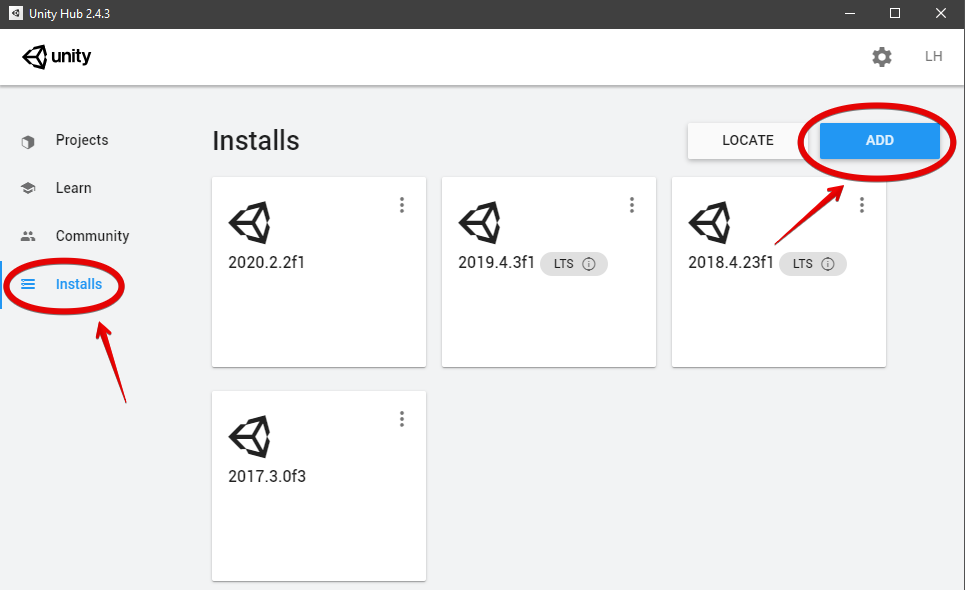

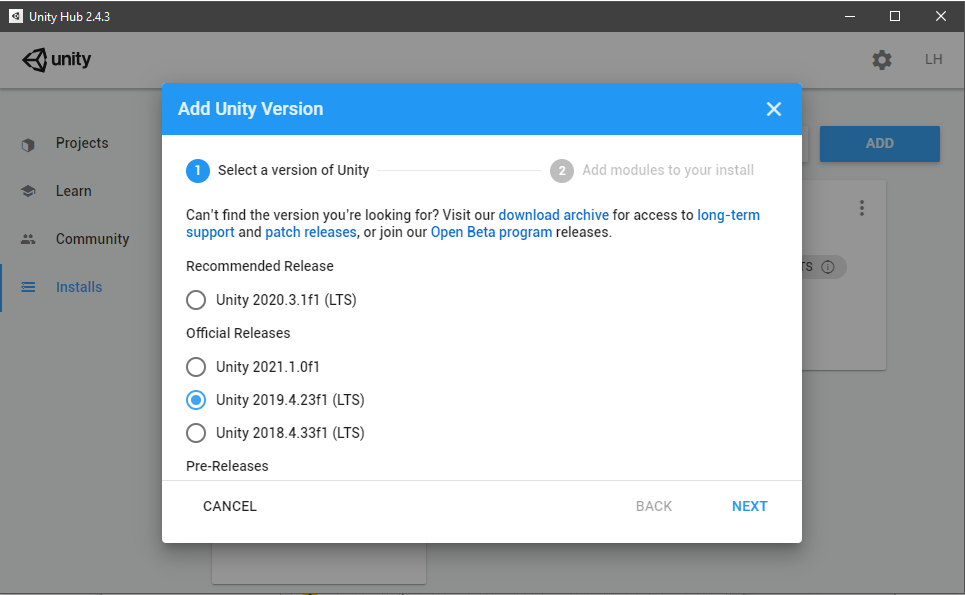

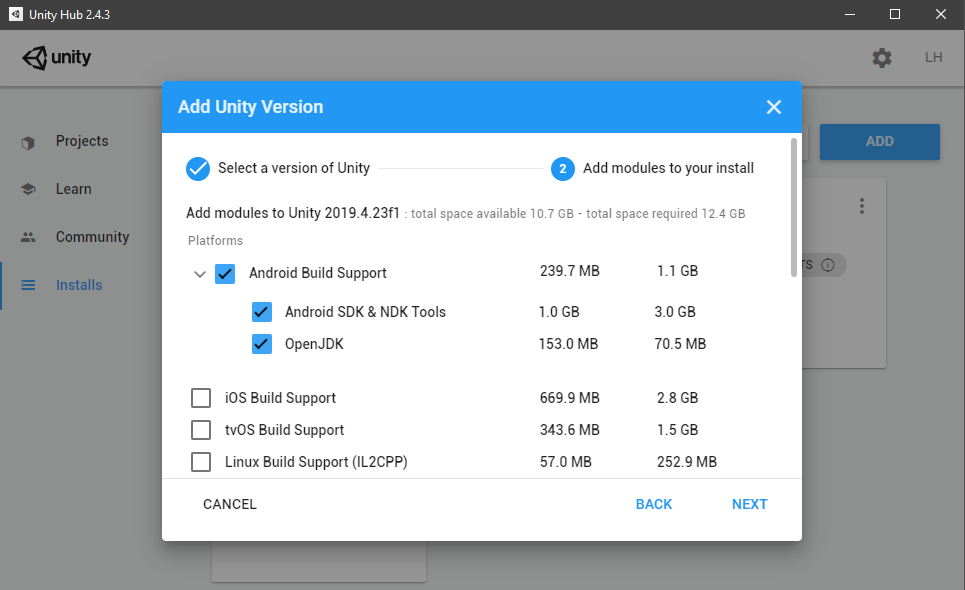

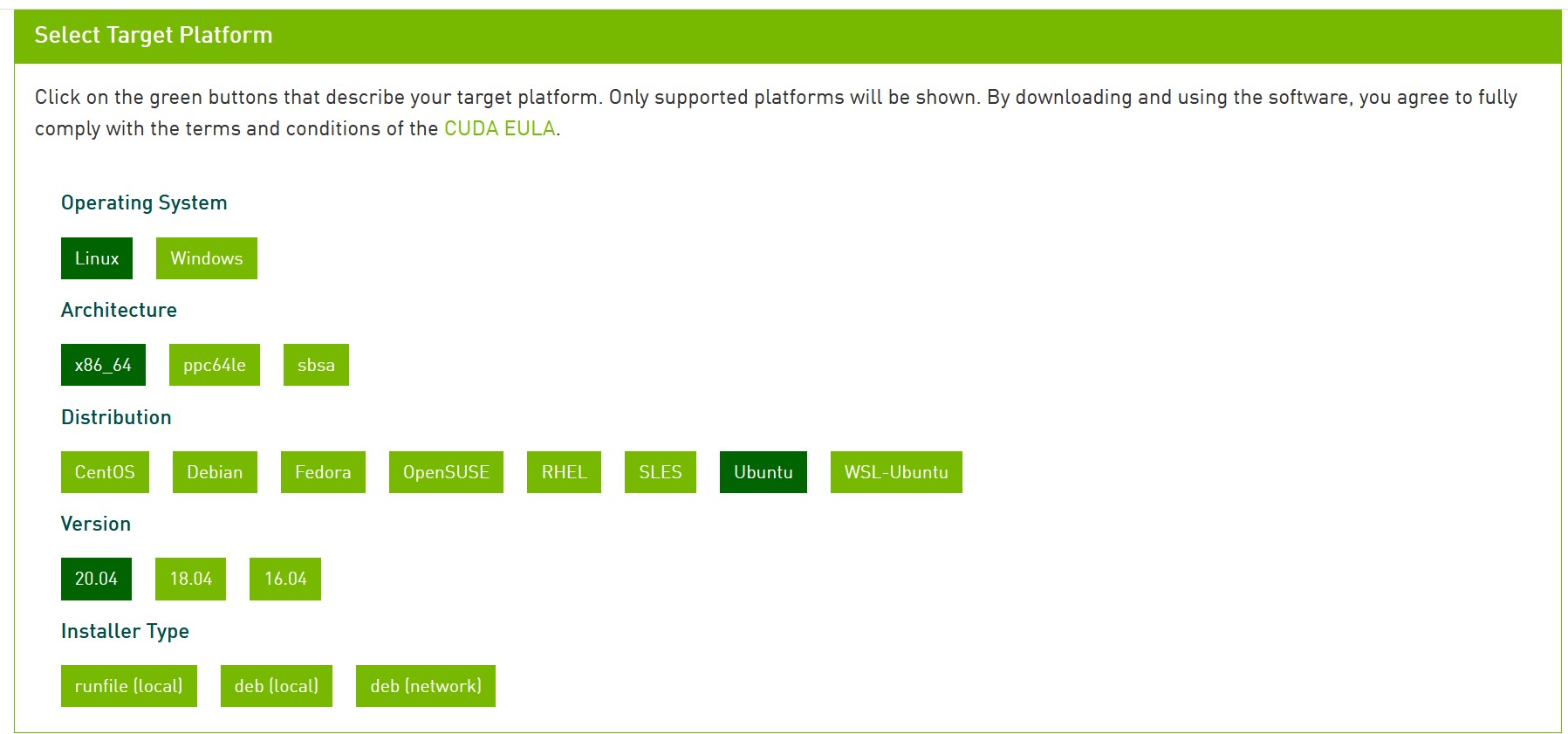

3. Software to be Used in the Book

4. Fundamentals of Digital Control

5. Conversion Between Analog and Digital Forms

6. Constructing Transfer Function of a System

7. Transfer Function Based Control System Analysis

8. Transfer Function Based Controller Design

9. State Space Based Control System Analysis

10. State Space Based Controller Design

11. Adaptive Control

12. Advanced Applications